
Managing a large website with more than 10,000 pages presents unique SEO challenges that demand special attention.

Conventional tools and strategies such as keyword optimization and link building remain essential for building a solid SEO foundation and maintaining basic hygiene. However, they may not fully address the technical intricacies of search bot visibility or the evolving requirements of a sprawling enterprise website.

This is where log analyzers come in. An SEO log analyzer monitors and parses server access logs, revealing how search engines interact with your website. With these insights, you can optimize your site for both search crawlers and users, maximizing the return on your SEO efforts.

In this article, we’ll explain what a log analyzer is and how it can support a lasting enterprise SEO strategy. First, though, let’s briefly look at what makes SEO management so challenging for websites with thousands of pages.

Managing SEO for a website with more than 10,000 pages isn’t simply a matter of scaling up; it’s an entirely different playing field.

Relying solely on traditional SEO tactics can severely limit your site’s potential for organic growth. Even if your pages have impeccable titles and content, they may be overlooked and never rank well if Googlebot cannot crawl them effectively.

On large websites, the sheer volume of content makes it hard to ensure that every important page is visible to Googlebot. Complex site architecture often worsens crawl budget issues, so crucial pages can be missed during Googlebot’s crawls.

Large websites are also particularly vulnerable to technical glitches, such as unexpected code changes from the development team, which can significantly affect SEO performance. These issues often compound other problems, including slow page loads on content-heavy pages, broken links at scale, and redundant pages competing for the same keywords (known as keyword cannibalization).

In short, problems of scale call for a more comprehensive SEO approach: one that adapts to the complexity of large websites and ensures that every optimization effort contributes meaningfully to greater visibility and traffic.

This is why an SEO log analyzer matters: it provides the detailed insights needed to prioritize high-impact actions. Central to this approach is understanding Googlebot’s behavior and treating it as the primary user of your website. Until Googlebot can efficiently access your essential pages, they will struggle to rank and attract traffic.

An SEO log analyzer is a tool that parses and evaluates the data a web server records each time a page is requested. Its primary function is to monitor how search engine crawlers interact with a website, showing what happens behind the scenes. By analyzing these records, it can show which pages are crawled and how often, and flag crawl issues, such as Googlebot failing to reach crucial pages.
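To make this concrete, here is a minimal sketch of the first step any log analyzer performs: parsing access-log lines and counting Googlebot requests per URL and per HTTP status code. It assumes the common Apache/Nginx "combined" log format; the function name, the sample lines, and the IP addresses (taken from documentation ranges) are hypothetical, and this is not how JetOctopus or any other specific tool is implemented.

```python
import re
from collections import Counter

# One line of the Apache/Nginx "combined" log format:
# host ident user [time] "METHOD path PROTOCOL" status bytes "referer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<host>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests whose user agent claims to be Googlebot, per path and per status.

    User-agent matching alone can be spoofed; a production pipeline would also
    verify the requesting IP before trusting these counts.
    """
    per_path = Counter()
    per_status = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None or "Googlebot" not in match["agent"]:
            continue  # skip unparseable lines and non-Googlebot traffic
        per_path[match["path"]] += 1
        per_status[int(match["status"])] += 1
    return per_path, per_status


GOOGLEBOT_UA = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
BROWSER_UA = "Mozilla/5.0 (Windows NT 10.0; Win64; x64)"
sample_log = [
    f'192.0.2.10 - - [10/May/2024:06:25:14 +0000] "GET /catalog/shoes HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'192.0.2.10 - - [10/May/2024:06:25:20 +0000] "GET /catalog/shoes?sort=price HTTP/1.1" 200 5120 "-" "{GOOGLEBOT_UA}"',
    f'198.51.100.7 - - [10/May/2024:06:26:02 +0000] "GET /catalog/shoes HTTP/1.1" 200 5120 "-" "{BROWSER_UA}"',
    f'192.0.2.10 - - [10/May/2024:06:27:41 +0000] "GET /old-page HTTP/1.1" 404 312 "-" "{GOOGLEBOT_UA}"',
]

paths, statuses = googlebot_hits(sample_log)
print(paths.most_common())
print(dict(statuses))  # {200: 2, 404: 1}
```

The browser request is ignored, so only the three Googlebot hits are counted. Real tools layer IP verification, URL normalization, and storage for very large log volumes on top of this basic step.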

By analyzing these server logs, log analyzers give SEO teams a clear picture of how search engines perceive a website. Teams can then make precise adjustments to improve site performance, streamline crawling, and ultimately increase visibility on search engine results pages (SERPs).

In short, a thorough examination of log data uncovers opportunities and issues that might otherwise stay hidden, especially on larger websites.

But why treat Googlebot as your most important visitor, and why does crawl budget matter so much? Let’s look at these questions more closely.

Crawl budget is the number of pages a search engine bot, such as Googlebot, will crawl on your site within a given period. Once a site has used up its budget, the bot stops crawling it and moves on to other websites.

Google sets each website’s crawl budget individually, taking into account factors such as site size, performance, update frequency, and links. By optimizing these factors, you can increase your crawl budget and speed up the ranking of new pages and content.

Making the most of this budget ensures that Googlebot visits and indexes your crucial pages frequently. This often leads to better rankings, provided your content and user experience meet high standards.
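A rough back-of-the-envelope model shows why wasted crawl budget matters at scale. The sketch below estimates how many days Googlebot would need to visit every page in your site structure once, given its daily hit count and the share of those hits that land on structure pages. This is a simplification, not how Google schedules crawls: it assumes a constant crawl rate and no repeat visits to the same URL, and the function name and all numbers are hypothetical.

```python
def days_for_full_crawl(pages_in_structure, googlebot_hits_per_day, share_on_structure):
    """Best-case days for Googlebot to visit every structure page once.

    Simplifying assumptions: the crawl rate is constant and no structure URL is
    fetched twice before all of them have been fetched once.
    """
    if not 0 < share_on_structure <= 1:
        raise ValueError("share_on_structure must be in (0, 1]")
    useful_hits_per_day = googlebot_hits_per_day * share_on_structure
    return pages_in_structure / useful_hits_per_day


# Hypothetical site: 50,000 pages in the structure, 5,000 Googlebot hits a day.
before = days_for_full_crawl(50_000, 5_000, 0.6)  # 40% of hits wasted elsewhere
after = days_for_full_crawl(50_000, 5_000, 0.9)   # waste cut to 10%
print(f"{before:.1f} days -> {after:.1f} days")
```

With these made-up numbers, cutting waste from 40% to 10% shortens a full pass from about 16.7 days to about 11.1 days without any increase in the crawl budget itself.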

This is where a log analyzer proves its value, by showing in detail how crawlers interact with your site. As mentioned earlier, it reveals which pages are crawled and how often, helping you find and fix inefficiencies such as low-value or irrelevant pages that waste crawl resources.
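As an illustration of how such inefficiencies can be quantified, the sketch below computes the share of Googlebot hits spent on URLs that are probably low-value. The rule it uses is a hypothetical heuristic of our own, not a standard definition: a hit counts as waste if the response was not 200 OK or the URL carries a query string (sorting, filtering, tracking parameters). Treating every redirect as waste is also a simplification, since occasional redirects are normal.

```python
from urllib.parse import urlsplit


def crawl_waste(hits):
    """Return the share of Googlebot hits spent on likely low-value URLs, plus those URLs.

    hits: iterable of (path, status) pairs for Googlebot requests.
    Hypothetical heuristic: a hit is waste if the status is not 200 or the
    URL has a query string.
    """
    total = 0
    wasted_urls = []
    for path, status in hits:
        total += 1
        if status != 200 or urlsplit(path).query:
            wasted_urls.append(path)
    share = len(wasted_urls) / total if total else 0.0
    return share, wasted_urls


hits = [
    ("/catalog/shoes", 200),
    ("/catalog/shoes?sort=price", 200),
    ("/old-page", 404),
    ("/catalog/boots", 200),
]
share, wasted = crawl_waste(hits)
print(f"{share:.0%} of Googlebot hits look wasted: {wasted}")
```

In practice you would tune the rule to your own site, for example whitelisting parameters that produce genuinely distinct, indexable pages.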

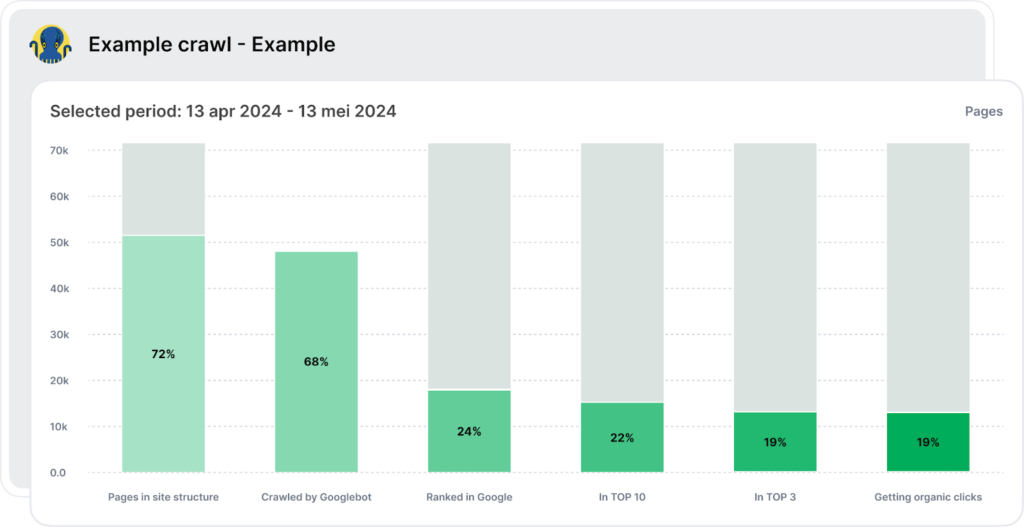

An advanced log analyzer such as JetOctopus provides a comprehensive overview of every stage, from crawling and indexation to organic clicks. Its SEO Funnel covers each key stage, from Googlebot’s first encounter with your website to a top 10 ranking and organic traffic.

As illustrated above, the table shows the ratio of pages open to indexation versus pages closed to indexation. This ratio is critical: if commercially important pages are closed to indexation, they will be missing from every subsequent stage of the funnel.

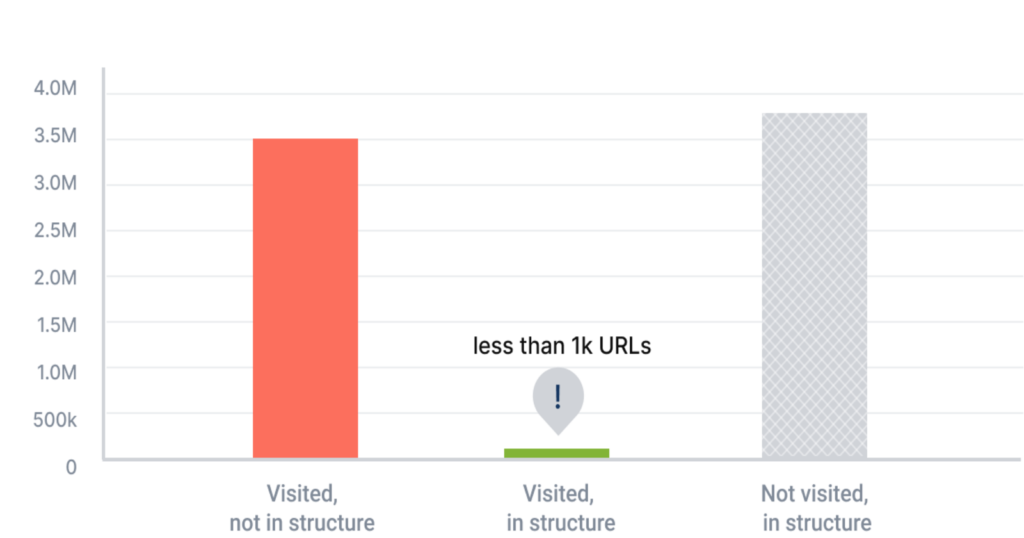

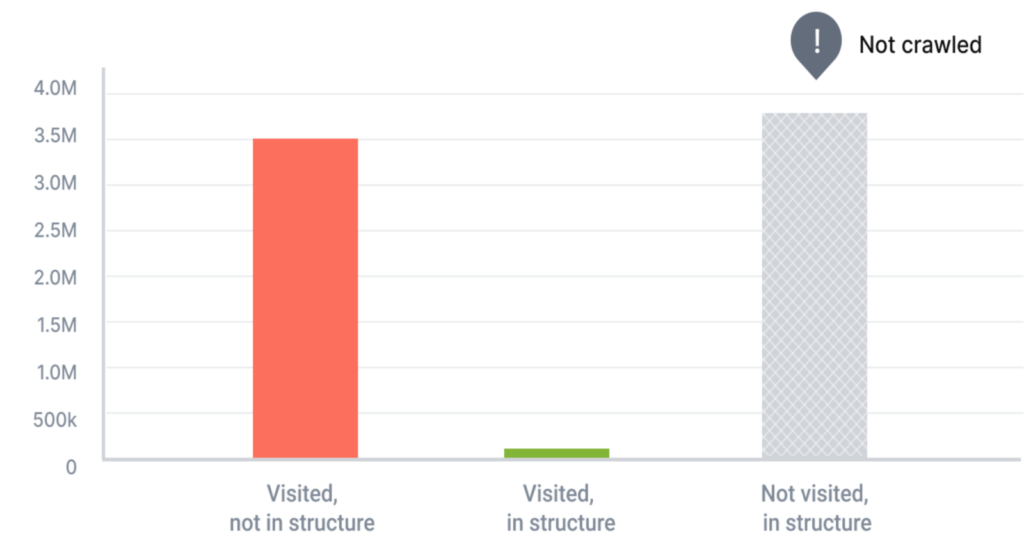

The next stage evaluates the number of pages crawled by Googlebot. “Green pages” are pages within the site’s structure that Googlebot has crawled, while “gray pages” indicate potential crawl budget waste: Googlebot visits them, but they lie outside the structure and may be orphan pages or pages excluded by mistake. Scrutinizing this part of your crawl budget is therefore crucial for optimization.

Later stages measure the percentage of pages ranking in Google SERPs, the share ranking in the top 10 or top three, and, finally, the number of pages receiving organic clicks.
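The green/gray distinction boils down to set arithmetic between two URL lists: the pages found in your site structure (for example, by a site crawl) and the pages Googlebot requested according to your logs. The sketch below is a minimal, hypothetical version of that comparison; it also returns structure pages that Googlebot never visited, which are the overlooked pages discussed later. It assumes both lists are normalized the same way (scheme, host, trailing slashes, parameters), otherwise identical pages will not match.

```python
def split_crawled_pages(structure_urls, googlebot_urls):
    """Split URLs into green and gray groups, plus structure pages never crawled.

    Assumes both inputs use the same URL normalization.
    """
    structure = set(structure_urls)
    crawled = set(googlebot_urls)
    green = crawled & structure        # in the structure and crawled
    gray = crawled - structure         # crawled, but outside the structure
    not_crawled = structure - crawled  # in the structure, never visited
    return green, gray, not_crawled


structure = ["/", "/catalog/shoes", "/catalog/boots", "/about"]
from_logs = ["/", "/catalog/shoes", "/old-promo", "/catalog/shoes"]
green, gray, not_crawled = split_crawled_pages(structure, from_logs)
print(sorted(green), sorted(gray), sorted(not_crawled))
```

Here `/old-promo` is a gray page (crawl budget spent outside the structure), while `/catalog/boots` and `/about` are structure pages Googlebot has not reached.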

In short, the SEO funnel provides precise metrics, with links to lists of URLs for deeper examination, such as indexable versus non-indexable pages and instances of crawl budget waste. It is a strong starting point for crawl budget analysis: it visualizes the overall landscape and yields the insights needed to build an optimization strategy that drives tangible SEO growth.

In simple terms, prioritizing high-value pages (making sure they are error-free and easily accessible to search bots) can significantly improve your site’s visibility and rankings.
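The funnel’s arithmetic is simple once you have a page count for each stage. The sketch below turns hypothetical stage counts into two percentages per stage: the share of the previous stage and the share of the top of the funnel. The stage names paraphrase this article and the counts are made up; neither reflects any tool’s actual report or export format.

```python
def funnel_report(stages):
    """stages: list of (stage_name, page_count), ordered from the top of the funnel.

    Returns (name, count, % of previous stage, % of first stage) rows.
    """
    first = stages[0][1]
    previous = first
    rows = []
    for name, count in stages:
        pct_prev = 100 * count / previous if previous else 0.0
        pct_first = 100 * count / first if first else 0.0
        rows.append((name, count, pct_prev, pct_first))
        previous = count
    return rows


# Hypothetical counts; stage names paraphrase the article, not a tool's export.
stages = [
    ("Open to indexation", 10_000),
    ("Crawled by Googlebot", 7_500),
    ("Ranked in SERPs", 3_000),
    ("In top 10", 1_200),
    ("Receiving organic clicks", 900),
]
rows = funnel_report(stages)
for name, count, pct_prev, pct_first in rows:
    print(f"{name:<26}{count:>7}  {pct_prev:5.1f}% of previous  {pct_first:5.1f}% of total")
```

Reading the "% of previous" column shows where the biggest drop-off happens; in these made-up numbers, only 40% of crawled pages rank at all, which would point to content or indexation issues rather than crawling.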

An SEO log analyzer shows you exactly what needs fixing on pages that crawlers overlook. Addressing these issues helps attract Googlebot visits. A log analyzer also helps optimize other critical aspects of your website:

Historical log data from a log analyzer is also immensely valuable. It makes your SEO performance both easier to understand and more predictable. By examining past crawl activity, you can identify patterns, anticipate potential problems, and devise more effective SEO strategies.

JetOctopus places no volume limits on logs, allowing thorough analysis without the risk of missing pivotal data. This is essential for continually refining your strategy and maintaining your site’s prominent position in the rapidly evolving search landscape.
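One simple way to put historical logs to work is to watch daily Googlebot hit counts and flag sudden drops, which often follow a deployment that broke something. The sketch below compares each day with the average of the preceding days; the function name, the 7-day window, the 50% threshold, and the sample history are all hypothetical choices for illustration.

```python
from statistics import mean


def flag_crawl_drops(daily_hits, window=7, threshold=0.5):
    """Flag days whose Googlebot hits fall below threshold x the trailing average.

    daily_hits: chronologically ordered list of (date, hits) pairs.
    """
    alerts = []
    for i in range(window, len(daily_hits)):
        baseline = mean(count for _, count in daily_hits[i - window:i])
        day, hits = daily_hits[i]
        if baseline and hits < threshold * baseline:
            alerts.append((day, hits, baseline))
    return alerts


history = [
    ("2024-05-01", 1000), ("2024-05-02", 1100), ("2024-05-03", 900),
    ("2024-05-04", 1000), ("2024-05-05", 1050), ("2024-05-06", 950),
    ("2024-05-07", 1000), ("2024-05-08", 300),
]
alerts = flag_crawl_drops(history)
for day, hits, baseline in alerts:
    print(f"{day}: {hits} Googlebot hits vs. a 7-day average of {baseline:.0f}")
```

A trailing average is deliberately crude; with several months of history you could compare against the same weekday or use seasonal baselines instead.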

Many prominent websites across different sectors have used log analyzers to win and hold top positions on Google for lucrative keywords, significantly fueling their business growth.

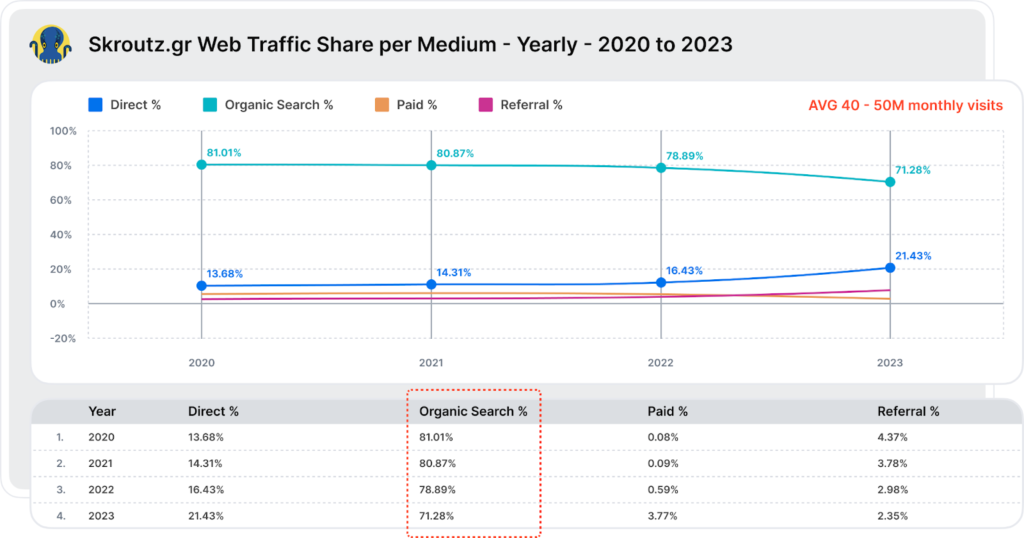

Take Skroutz, Greece’s largest marketplace platform, with more than 1 million daily sessions. They implemented a real-time crawl and log analyzer tool, which gave them insights such as:

Access to real-time visualization tables and more than ten months of historical log data enabled Skroutz to pinpoint crawling inefficiencies and reduce its index size, optimizing its crawl budget.

As a result, the time it took for new URLs to be indexed and ranked dropped from the usual 2-3 months to just a few days.

This use of log files for technical SEO has been instrumental in establishing Skroutz as one of the top 1,000 websites globally, according to SimilarWeb. It has also made Skroutz the fourth most visited website in Greece, behind only Google, Facebook, and YouTube, with more than 70% of its traffic coming from organic search.

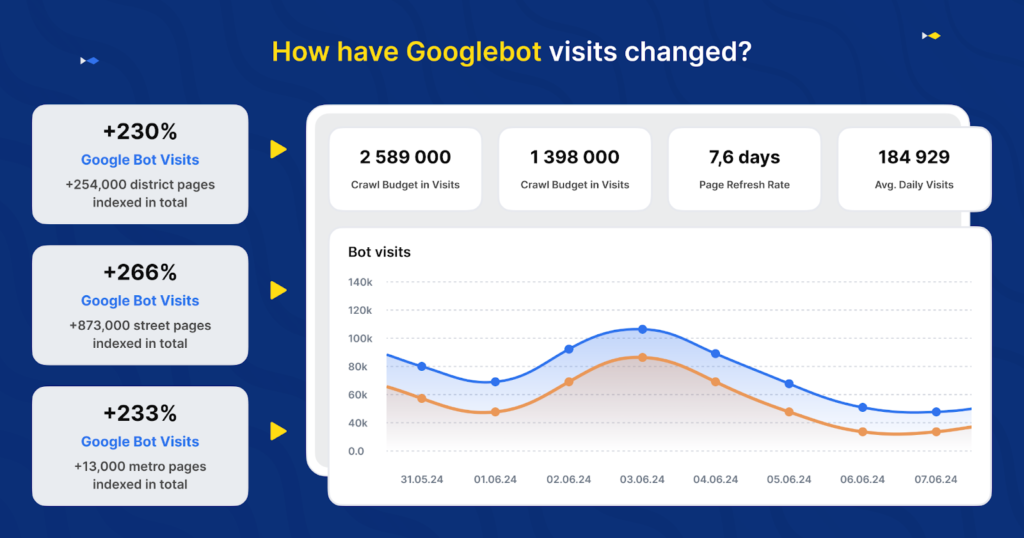

Another compelling example is DOM.RIA, Ukraine’s well-known platform for real estate and rental listings. By improving their website’s crawl efficiency, they doubled Googlebot visits. Given the size and complexity of their site, crawl efficiency was key to keeping the content shown on Google fresh and relevant.

First, they revamped their sitemap to improve the indexing of deeper directories. On its own, however, this did not significantly increase Googlebot visits.

Next, using JetOctopus to analyze their log files, DOM.RIA identified and fixed issues related to internal linking and DFI (distance from index, i.e., the number of clicks from the homepage). They created mini-sitemaps for poorly crawled directories, such as those for specific cities, listing URLs for streets, districts, metro stations, and so on. They also added meta tags with links to pages frequented by Googlebot. Within just two weeks, Googlebot activity on these key pages more than doubled.
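The mini-sitemap idea can be sketched as grouping URLs by directory and writing one small sitemap per group. The article does not say whether DOM.RIA’s mini-sitemaps were XML files or HTML link pages; this sketch assumes XML sitemaps following the sitemaps.org protocol, and the function names and example URLs are hypothetical. It requires Python 3.8+ for the `xml_declaration` argument of `ElementTree.tostring`.

```python
import xml.etree.ElementTree as ET
from collections import defaultdict
from urllib.parse import urlsplit

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def group_by_directory(urls, depth=1):
    """Group URLs by their first `depth` path segments (e.g. one group per city)."""
    groups = defaultdict(list)
    for url in urls:
        segments = [s for s in urlsplit(url).path.split("/") if s]
        groups["/".join(segments[:depth])].append(url)
    return groups


def build_sitemap(urls):
    """Serialize URLs as a sitemaps.org <urlset> document (UTF-8 bytes)."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True)


# Hypothetical URLs from poorly crawled city directories.
urls = [
    "https://example.com/kyiv/street-khreshchatyk",
    "https://example.com/kyiv/metro-arsenalna",
    "https://example.com/lviv/district-halytskyi",
]
mini_sitemaps = {
    directory: build_sitemap(group)
    for directory, group in group_by_directory(urls).items()
}
print(mini_sitemaps["kyiv"].decode("utf-8"))
```

Which directories count as "poorly crawled" would come from the log analysis itself, for example structure pages with few or no Googlebot hits over a given period.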

Now that you know what a log analyzer is and why it matters for large websites, let’s look at the steps involved in log analysis.

Here’s a breakdown of how to use an SEO log analyzer such as JetOctopus on your website:

By following these steps, you can ensure that search engines efficiently crawl and index your most important content.

Clearly, using a log analyzer strategically is more than a technicality; it’s a vital component of managing a large website. Improving your site’s crawl efficiency with a log analyzer can profoundly influence your visibility on SERPs.

For chief marketing officers (CMOs) overseeing large websites, adopting a log analyzer and crawler toolkit such as JetOctopus is like adding a technical SEO analyst to the team. The tool bridges the gap between integrating SEO data and growing organic traffic.

Original news from SearchEngineJournal